June 2, 2021

Demystifying Docker Architecture for Beginners

Looking for work?

CHECK OUT TOPCODER DEVOPS FREELANCE GIGS

What is Docker?

Docker is a containerization technology that is mainly used to package applications and distribute them across different kinds of platforms, largely independent of the host’s operating system. By running “docker run” you can deploy and run a container on your host. If the image the container needs is not already available on your host, Docker automatically pulls it from a Docker registry before running the container.

Developers don’t need to worry about whether the container will be deployed to CentOS, Ubuntu or a Mac. As long as Docker is installed on the target host, it is good to go. (On macOS and Windows, Docker Desktop runs Linux containers inside a lightweight Linux virtual machine.)
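When “docker run” is given a short name such as hello-world, Docker expands it into a full image reference before asking a registry for it: the default registry is Docker Hub (docker.io), official images live under the library/ namespace, and the tag defaults to latest. The Python sketch below approximates those rules. The ImageRef type and normalize function are hypothetical names, and the sketch skips the validation (allowed characters, lowercase names) that Docker’s real parser performs.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def normalize(ref: str) -> ImageRef:
    """Approximate how Docker expands a short image name (no validation)."""
    name, digest = ref, None
    if "@" in name:  # pinned by content digest, e.g. nginx@sha256:...
        name, digest = name.split("@", 1)

    tag = None
    # A ':' after the last '/' separates the tag; an earlier ':' is a registry port.
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]

    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest  # explicit registry host
    else:
        registry, repository = "docker.io", name  # default: Docker Hub
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository  # namespace of official images

    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest)


print(normalize("hello-world"))
# ImageRef(registry='docker.io', repository='library/hello-world', tag='latest', digest=None)
```

Note that latest is just a tag name chosen by convention; it does not guarantee you get the newest build of an image.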

Docker Use Cases

ENABLING CONTINUOUS DELIVERY (CD)

Docker builds are more easily reproducible than traditional builds. Docker images can be tagged, so each change can produce a uniquely identifiable image, which makes implementing CD easier. Standard CD techniques include blue/green deployment, where the live (previous) deployment keeps running until the new deployment has succeeded, and phoenix deployment, where the whole system is rebuilt on each release.
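The tagging and blue/green ideas can be sketched as a toy model in Python. The names release_tag and BlueGreen and the health-check callable are hypothetical; "deploying" to a slot is reduced to recording which image it runs, whereas a real setup would start containers and repoint a load balancer or router.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


def release_tag(repository: str, git_sha: str) -> str:
    """Tag an image with a short commit SHA so every change gets its own image."""
    return f"{repository}:{git_sha[:12]}"


@dataclass
class BlueGreen:
    """Two deployment slots; only the live one receives traffic."""
    slots: Dict[str, Optional[str]] = field(
        default_factory=lambda: {"blue": None, "green": None})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy(self, image: str, health_check: Callable[[str], bool]) -> str:
        """Deploy to the idle slot and switch traffic only if it passes the check."""
        target = self.idle
        self.slots[target] = image  # the live slot keeps serving meanwhile
        if health_check(image):
            self.live = target  # cut over; the old slot stays available for rollback
        return self.live


bg = BlueGreen(slots={"blue": release_tag("myapp", "3f2a9c1d0b7e55aa"), "green": None})
print(bg.deploy(release_tag("myapp", "9b8c7d6e5f4a3210"), health_check=lambda img: True))
# green
```

If the health check fails, traffic stays on the previous slot, which is what makes rolling back a blue/green release cheap.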

REDUCING DEBUGGING OVERHEAD

We all have to debug at some point in our careers: missing libraries, problematic dependencies, unreproducible bugs, and so on. Docker lets you write down, concisely, the dependency steps your program needs in order to run. In a traditional environment we often run into environment-specific issues (“it works in dev!”), but with Docker the same image can be promoted through every environment (dev, staging and production), so the application runs with the same dependencies everywhere. This makes life easier both for DevOps engineers troubleshooting production issues and for developers working on dev or staging stacks.
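Those dependency steps are usually written in a Dockerfile. One caveat for the "same everywhere" goal: a base image referenced without a tag, or with the floating latest tag, can resolve to a different image from one build to the next. The hypothetical floating_base_images helper below is a minimal lint sketch (not a Docker tool) that flags such FROM lines; it ignores scratch, earlier build-stage names and digest-pinned images, and it does not expand ARG variables.

```python
import re
from typing import List

# Hypothetical Dockerfile: each dependency step is spelled out, so every build repeats it.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""

FROM_LINE = re.compile(
    r"\s*FROM\s+(?:--platform=\S+\s+)?(\S+)(?:\s+AS\s+(\S+))?", re.IGNORECASE)


def floating_base_images(dockerfile: str) -> List[str]:
    """Return base images that have no tag or use 'latest' and are not digest-pinned."""
    stages = set()  # names given with 'FROM ... AS <name>'
    flagged = []
    for line in dockerfile.splitlines():
        match = FROM_LINE.match(line)
        if not match:
            continue
        image, alias = match.group(1), match.group(2)
        is_stage = image.lower() in stages
        if not (is_stage or image == "scratch" or "@" in image):
            last = image.rsplit("/", 1)[-1]  # ignore a registry port like host:5000/
            if ":" not in last or last.endswith(":latest"):
                flagged.append(image)
        if alias:
            stages.add(alias.lower())
    return flagged


print(floating_base_images(DOCKERFILE))       # []
print(floating_base_images("FROM ubuntu\n"))  # ['ubuntu']
```

Pinning a base image by digest (FROM image@sha256:...) gives the strongest guarantee; an explicit version tag is a common middle ground.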

ENABLING FULL-STACK PRODUCTIVITY WHEN OFFLINE

Because you can bundle your application in Docker containers, you can develop by orchestrating the containers on your local host, and work offline if required once the images you need have been pulled.

MODELING NETWORKS

Depending on the host’s resources, you can spin up hundreds, even thousands, of containers on one host within minutes. This lets us model a network with little effort and test real-world use cases without spending much money.

MICROSERVICES ARCHITECTURE

Traditional applications follow a monolithic architecture, but many companies now design their applications using service-oriented architecture (SOA) or microservice architecture. In a microservice architecture, each service has a specific responsibility.

With Docker, each service can be deployed as its own container. This lets developers focus on specific services without interrupting others, and deploy a service without affecting the whole system.

PROTOTYPING SOFTWARE

Spinning up containers and deploying them to a server takes only minutes, so you can quickly experiment with ideas and features without worrying about affecting the whole application.

PACKAGING SOFTWARE

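Docker can be used to package applications efficiently. Because a Docker image bundles everything the application needs, the only dependency on a Linux host is Docker itself, so developers can build an image and be confident it will run on any modern Linux machine with Docker installed. For example, a Java application can run on a host with no JVM installed, because the JVM ships inside the image.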

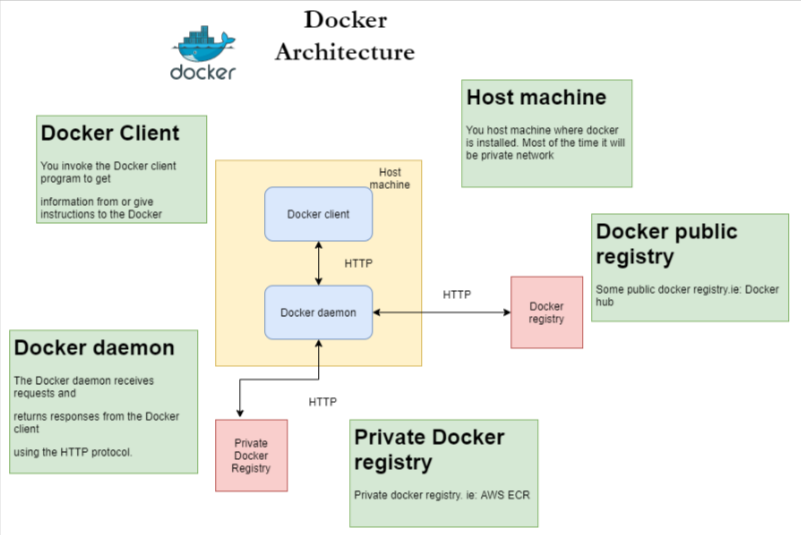

Docker Architecture

Docker Daemon

The Docker daemon (dockerd) is the central point of all your Docker interactions. It manages the state of your containers and images, handles connections with the outside world (such as pulling images from registries), and controls access to Docker on your machine.

A daemon is a program that runs in the background and is not under the user’s direct control. A server is a program that receives requests from clients and executes the required actions to satisfy those requests. Daemons are frequently also servers that accept client requests to conduct actions on their behalf. The Docker daemon acts as the server, and the Docker command is the client.

Docker Client

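The Docker client communicates with the Docker daemon by sending HTTP requests to the Docker Engine REST API, by default over the Unix socket /var/run/docker.sock on Linux. The client is a simple component in the Docker architecture: when you run a “docker run” command, it sends HTTP requests asking the daemon to pull the image to your host (if needed) and to create and start the container.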
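To see that the client really is just an HTTP client, the sketch below sends a request straight to the Docker Engine API using only Python’s standard library. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and your user may access it (typically root or a member of the docker group). GET /version is a real Engine API endpoint; UnixHTTPConnection and docker_get are names made up for this example.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.settimeout(self.timeout)
        self.sock.connect(self.socket_path)


def docker_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET `path` to the Docker daemon; return (status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read() or b"null")
    finally:
        conn.close()
```

With a running daemon, docker_get("/version") should return status 200 and a dictionary that includes the daemon’s Version field, and docker_get("/containers/json") lists running containers, much like docker ps.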

Docker Registry

Similar to source code repositories, the Docker world has registries. Once you build a Docker image, you may want to share it with the world, and for that you use a registry. A registry can be private, fully public, or public but accessible only to users who have registered with Docker. Whichever kind it is, every registry performs the same function through the same API.

Docker Hub is a well-known public Docker registry, though it also lets you create private repositories. AWS offers a comparable managed service, Amazon Elastic Container Registry (ECR), which is used mainly for private repositories.

A Docker registry lets users push and pull images through a RESTful API. Some companies also run private registries in their own on-premises environments.
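That RESTful API is the Docker Registry HTTP API V2 (now standardized as the OCI Distribution Specification): a client fetches an image manifest from /v2/<name>/manifests/<reference> and lists tags from /v2/<name>/tags/list. The helpers below are a minimal sketch that only builds those URLs and the request, assuming the registry is served over HTTPS. Actually calling Docker Hub (whose API is served at registry-1.docker.io) also requires a bearer token, which this sketch leaves as a hypothetical token argument.

```python
import urllib.request
from typing import Optional

# Manifest formats a modern client is typically willing to accept.
MANIFEST_TYPES = ", ".join([
    "application/vnd.oci.image.index.v1+json",
    "application/vnd.oci.image.manifest.v1+json",
    "application/vnd.docker.distribution.manifest.list.v2+json",
    "application/vnd.docker.distribution.manifest.v2+json",
])


def manifest_url(registry: str, repository: str, reference: str) -> str:
    """URL of an image manifest; `reference` is a tag or a digest."""
    return f"https://{registry}/v2/{repository}/manifests/{reference}"


def tags_url(registry: str, repository: str) -> str:
    """URL that lists the tags of a repository."""
    return f"https://{registry}/v2/{repository}/tags/list"


def manifest_request(registry: str, repository: str, reference: str,
                     token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a GET request for a manifest."""
    headers = {"Accept": MANIFEST_TYPES}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(manifest_url(registry, repository, reference),
                                  headers=headers)
```

Because every registry speaks this same API, the same helpers work for Docker Hub, ECR or an on-premises registry; only the host name and the way you obtain a token change.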

Deploy Sample Hello World App

Deploying apps with Docker is straightforward. As a first step, Docker must be installed and running on the host machine. If it is not, follow the official documentation to download and configure it.

Once Docker is set up, run the following command to execute the hello world program.

```shell
$ docker run hello-world
```

Example output (first line):

```text
Hello from Docker!
```

When the command is executed, Docker takes the following steps:

The Docker client sends an HTTP request to the Docker daemon

The Docker daemon pulls the hello-world image (tag “latest”) from Docker Hub, if it is not already available locally

The Docker daemon creates a new container from that image and starts it

The container writes the message to standard output, and the daemon streams it back to the client, which prints it to your terminal
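Under the hood, those steps map onto a handful of Docker Engine API calls. The sketch below is an approximate, simplified model of what the docker CLI sends for “docker run hello-world”: the endpoints are real Engine API paths, but the exact order and query parameters can vary between Docker versions, and {id} stands for the container ID returned by the create call. It also reflects that the CLI typically tries to create the container first and asks the daemon to pull the image only when the daemon reports it missing.

```python
from typing import List, Tuple

Call = Tuple[str, str]  # (HTTP method, Engine API path)


def docker_run_calls(image: str, tag: str = "latest",
                     image_present: bool = False) -> List[Call]:
    """Approximate Engine API calls behind `docker run <image>:<tag>` (attached)."""
    calls: List[Call] = [("POST", "/containers/create")]  # JSON body names the image
    if not image_present:
        # The daemon answers 404 "No such image"; the client asks it to pull, then retries.
        calls.append(("POST", f"/images/create?fromImage={image}&tag={tag}"))
        calls.append(("POST", "/containers/create"))
    calls += [
        ("POST", "/containers/{id}/attach?stream=1&stdout=1&stderr=1"),  # stream output
        ("POST", "/containers/{id}/wait"),   # learn the exit code when it finishes
        ("POST", "/containers/{id}/start"),  # the container prints its message
    ]
    return calls


for method, path in docker_run_calls("hello-world"):
    print(method, path)
```

Attaching and waiting before starting means the client cannot miss any output, even from a container as short-lived as hello-world.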

Reference

Docker in Practice by Ian Miell, Aidan Hobson Sayers
